

Abstract: Objects manipulated by the hand (i.e., manipulanda) are particularly challenging to reconstruct from in-the-wild RGB images or videos. The hand occludes much of the object, and the object is often visible in only a small number of image pixels. At the same time, two strong anchors emerge in this setting: (1) estimated 3D hands help disambiguate the location and scale of the object, and (2) the set of manipulanda is small relative to all possible objects. With these insights in mind, we present a scalable paradigm for handheld object reconstruction that builds on recent breakthroughs in large language/vision models and 3D object datasets. Our model, MCC-Hand-Object (MCC-HO), jointly reconstructs hand and object geometry given a single RGB image and an inferred 3D hand as inputs. Subsequently, we use GPT-4(V) to retrieve a 3D object model that matches the object in the image and rigidly align the model to the network-inferred geometry; we call this alignment Retrieval-Augmented Reconstruction (RAR). Experiments demonstrate that MCC-HO achieves state-of-the-art performance on lab and Internet datasets, and we show how RAR can be used to automatically obtain 3D labels for in-the-wild images of hand-object interactions.


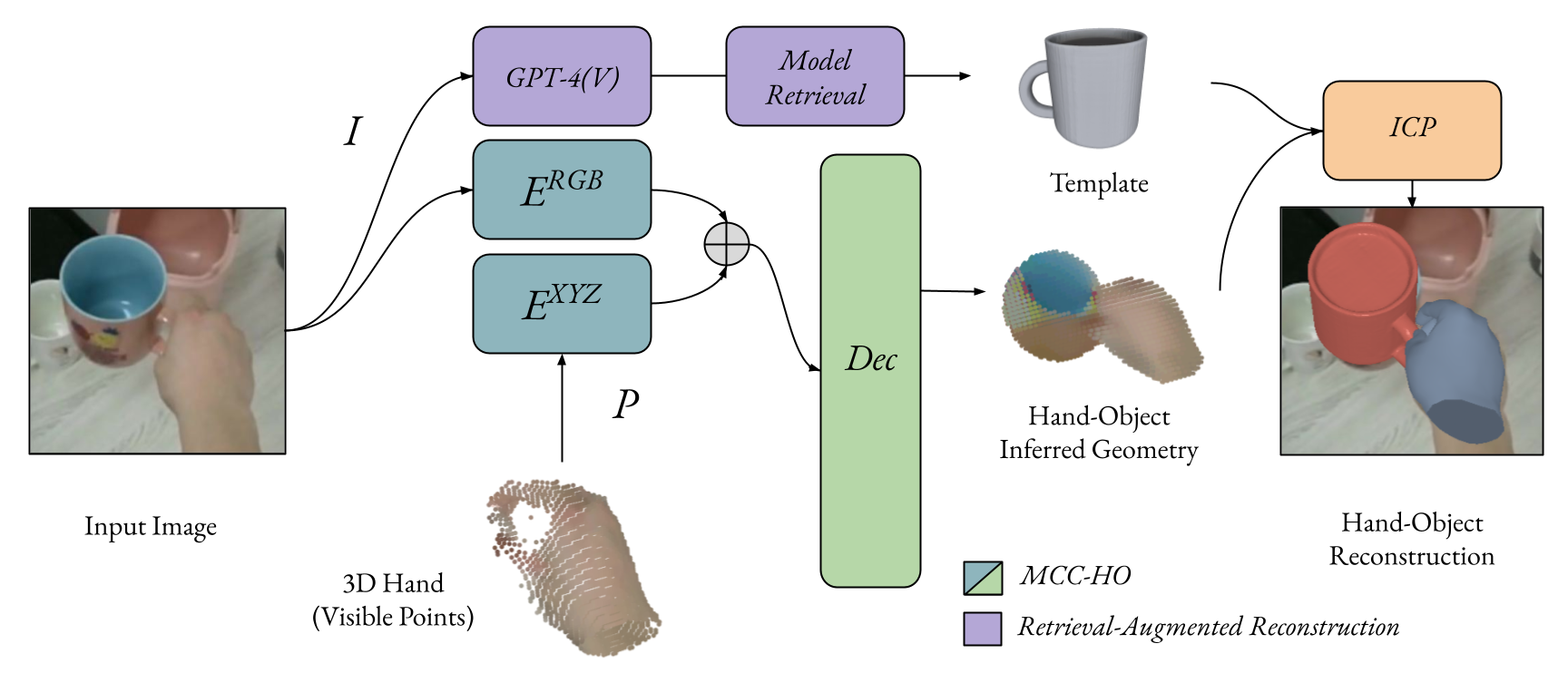

Method Overview


Overview. Given an RGB image and an estimated 3D hand, our method reconstructs handheld objects in three stages. In the first stage, MCC-HO predicts hand and object point clouds. In the second stage, a template mesh for the object is obtained using Retrieval-Augmented Reconstruction (RAR). In the third stage, the template object mesh is rigidly aligned to the network-inferred geometry using ICP.
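The third stage can be illustrated with a minimal point-to-point ICP sketch. This is not the authors' implementation: the page does not say which ICP variant or library is used, so the function names (`best_rigid_transform`, `icp`), the brute-force nearest-neighbour search, and the stopping rule below are all assumptions made for illustration. The sketch takes points sampled from the template mesh and the predicted object points as NumPy arrays.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t mapping src onto dst (Kabsch)."""
    src_mean, dst_mean = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_mean).T @ (dst - dst_mean)  # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_mean - R @ src_mean
    return R, t


def icp(template, target, iters=50, tol=1e-8):
    """Rigidly align template points (N, 3) to target points (M, 3).

    Returns the accumulated rotation, translation, and the moved template.
    Hypothetical helper: parameters and defaults are illustrative only.
    """
    R_total, t_total = np.eye(3), np.zeros(3)
    moved = template.copy()
    prev_err = np.inf
    for _ in range(iters):
        # Brute-force nearest neighbour in the target for each template point.
        d2 = ((moved[:, None, :] - target[None, :, :]) ** 2).sum(axis=2)
        nn = d2.argmin(axis=1)
        err = np.sqrt(d2[np.arange(len(moved)), nn]).mean()
        if abs(prev_err - err) < tol:  # mean residual stopped changing
            break
        prev_err = err
        R, t = best_rigid_transform(moved, target[nn])
        moved = moved @ R.T + t
        # Compose the new step with the transform accumulated so far.
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total, moved
```

Calling `icp(template_points, object_points)` returns a rotation and translation that can be applied to the template mesh vertices. Like any ICP, this sketch only converges to a good alignment from a reasonable initial pose, and the quadratic-cost neighbour search is suitable only for small point sets.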

MCC-Hand-Object (MCC-HO)


MCC-HO test results. The input image (top left), network-inferred hand-object point cloud (top right), rigid alignment of the ground truth mesh with the point cloud using ICP (bottom left), and an alternative view of the point cloud (bottom right) are shown.

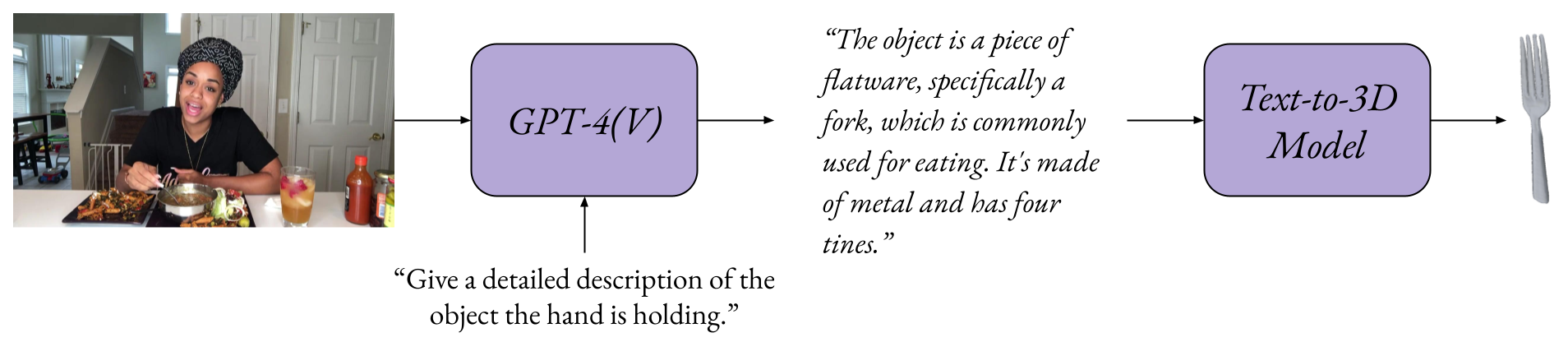

Retrieval-Augmented Reconstruction (RAR)


Retrieval-Augmented Reconstruction (RAR). Given an input image, we prompt GPT-4(V) to recognize the handheld object and provide a text description of it. The text description is then passed to a text-to-3D model (in our case, Genie) to obtain a 3D object.
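The retrieval step can be sketched as a small function that chains the two models. Everything named here is hypothetical: the prompt wording, the `RetrievedObject` container, and the `describe` and `text_to_3d` callables are placeholders for whatever client code wraps the vision-language model and the text-to-3D model. The sketch deliberately does not call any real GPT-4(V) or Genie API, since the page does not specify either interface.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical prompt; the actual prompt is not given on this page.
PROMPT = "Describe the object the hand is holding in a short noun phrase."


@dataclass
class RetrievedObject:
    description: str  # text returned by the vision-language model
    mesh_path: str    # location of the 3D model produced from that text


def retrieve_object(
    image_path: str,
    describe: Callable[[str, str], str],
    text_to_3d: Callable[[str], str],
    prompt: str = PROMPT,
) -> RetrievedObject:
    """Describe the handheld object in an image, then turn the text into a 3D model.

    `describe(image_path, prompt)` and `text_to_3d(description)` are
    caller-supplied wrappers (assumed interfaces, not real library calls).
    """
    description = describe(image_path, prompt).strip()
    if not description:
        raise ValueError("vision-language model returned an empty description")
    return RetrievedObject(description, text_to_3d(description))
```

Passing the models in as callables keeps the sketch independent of any particular service, and makes it easy to substitute stubs when testing the rest of the pipeline.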


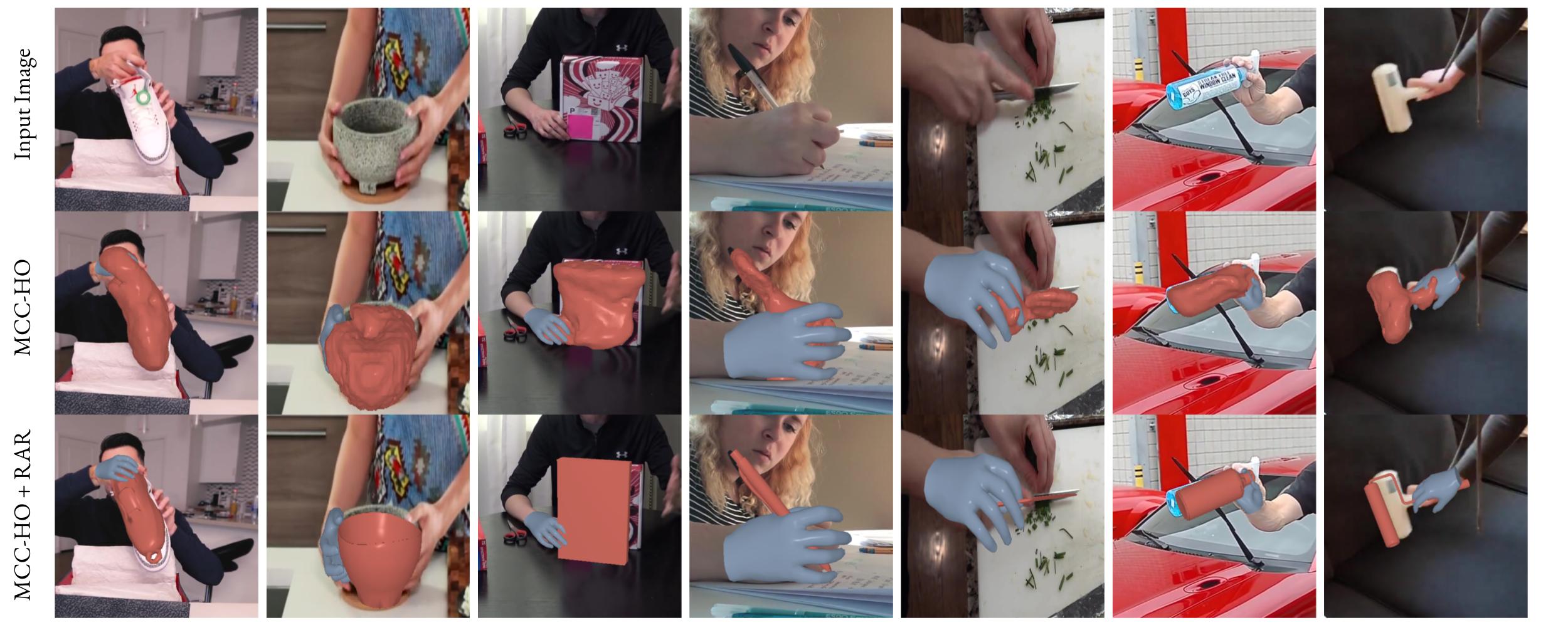

MCC-HO + RAR. Top row: input images. Second row: MCC-HO results visualized as meshes. Third row: RAR (in this case, rigid alignment of the ground truth meshes) applied to the output of MCC-HO.

Qualitative Results


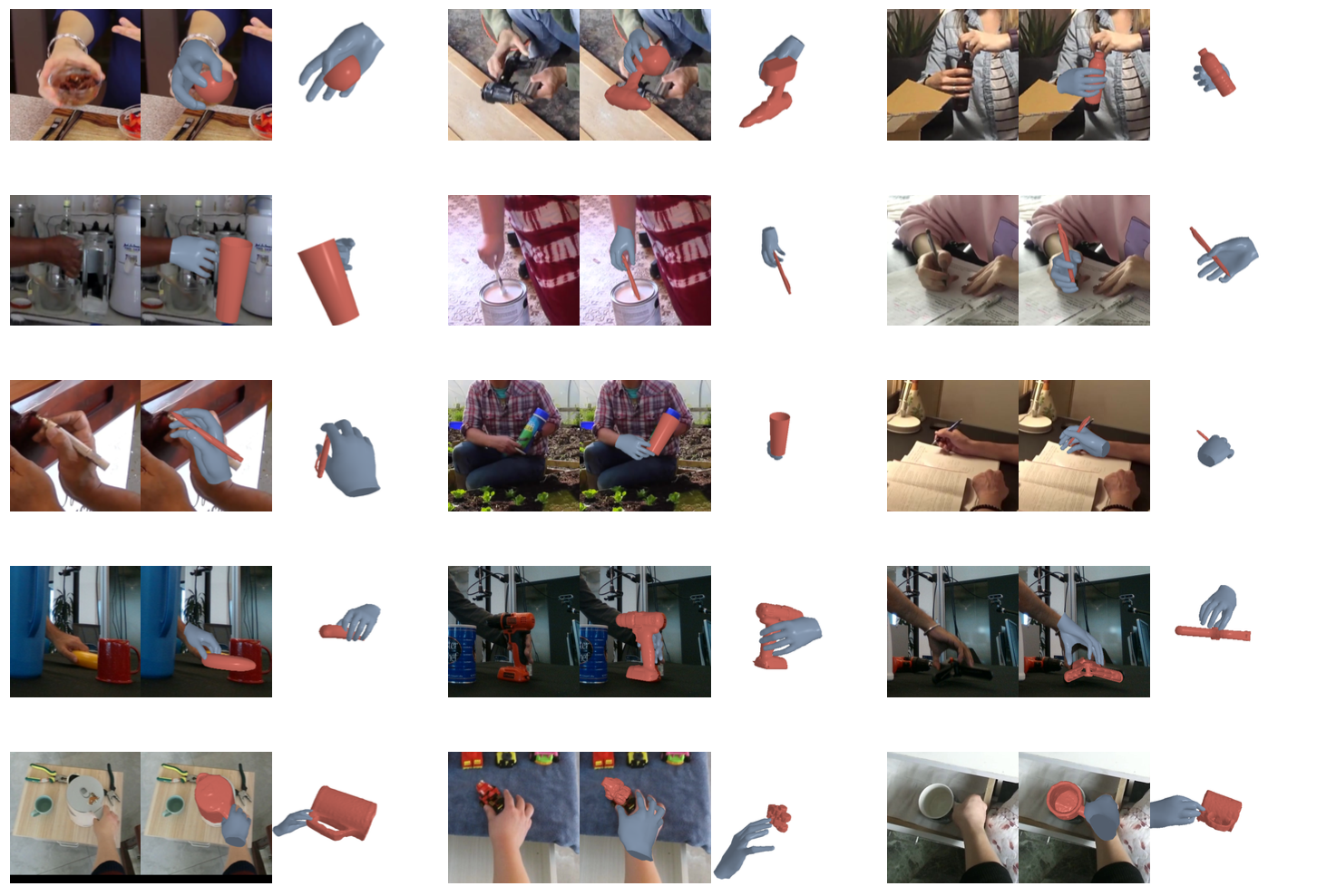

Qualitative results. Our approach applied to test images from MOW (Rows 1-3), DexYCB (Row 4), and HOI4D (Row 5). Each example includes the input image (left), rigid alignment of the ground truth mesh with the MCC-HO predicted point cloud using ICP (middle), and an alternative view (right).


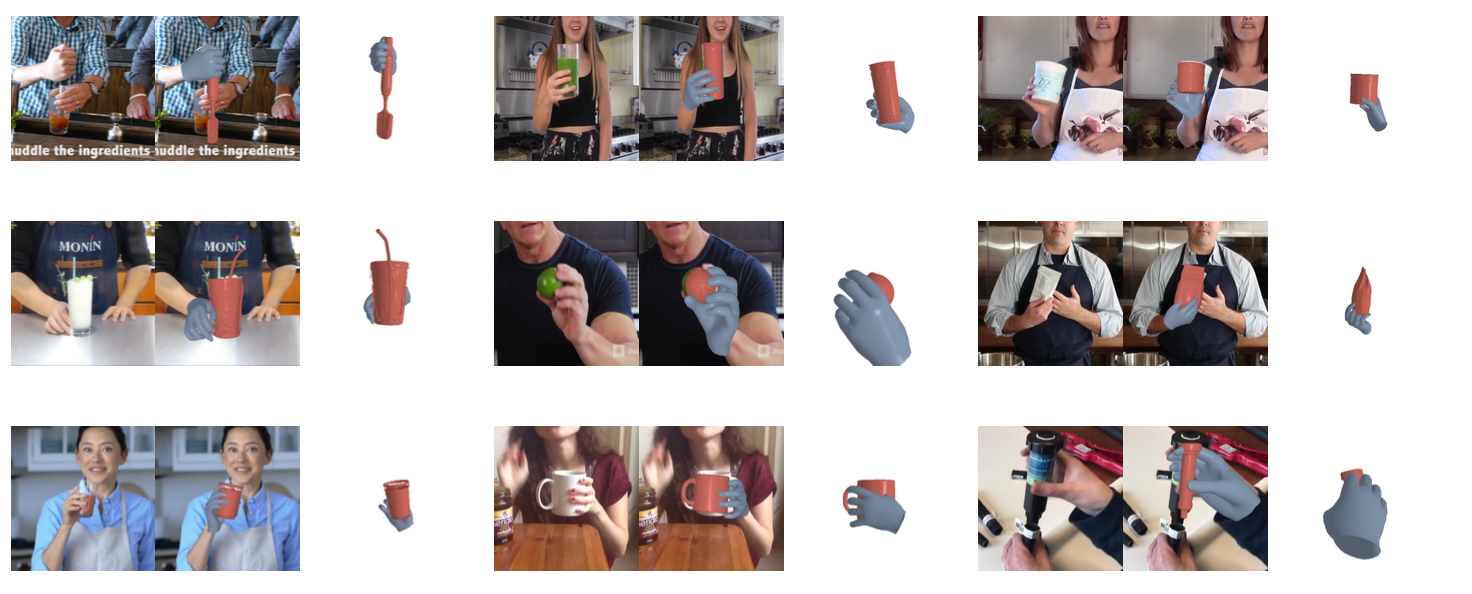

Additional results using 100DOH. Our approach applied to images in the 100 Days of Hands dataset (no ground truth 3D labels). For each image, we estimate 3D hands using HaMeR, infer object geometry using MCC-HO, retrieve a 3D model using RAR (here, GPT-4(V) and Genie), and rigidly align the 3D model with the inferred point cloud from MCC-HO.

Limitations


Limitations. The input image is not used when running ICP, so the aligned mesh can sometimes be misaligned with the image (Row 1). A post-processing step can be applied to resolve silhouette misalignment and any hand-object contact issues (Row 2).

Acknowledgements